OpenSUSE Tumbleweed has it. The Fedora 40 beta has it. It’s just a result of being bleeding edge. Arch doesn’t have exclusive rights to that.

I use arch btw

Uhh? Good for you?

Thank you

It’s a double edged sword, fastest patches and fastest exploits.

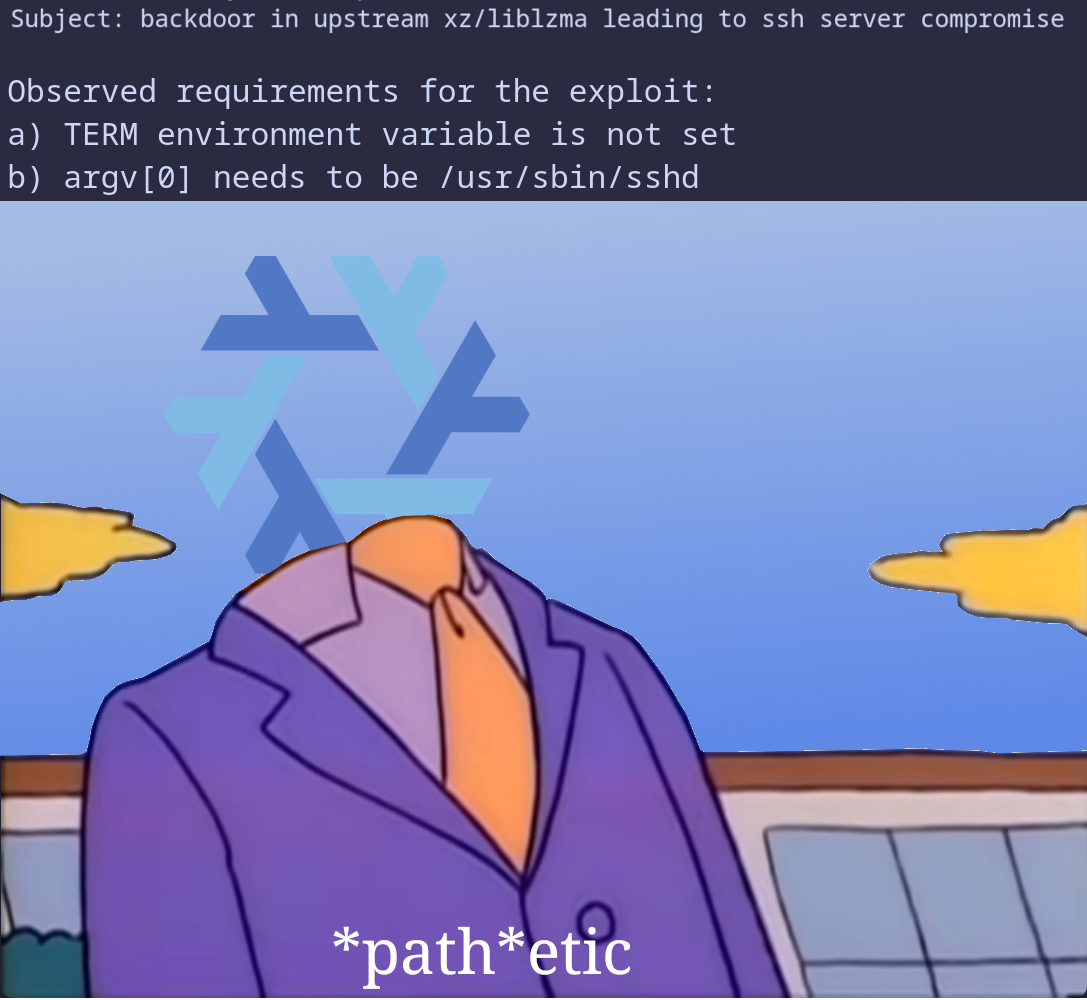

Incorrect: the backdoored version was originally discovered by a Debian sid user on their system, and it presumably worked. On Arch it’s questionable, since they don’t link `sshd` with `liblzma` (although some say some kind of cross-contamination may be possible via a patch used to support some systemd thingy, and systemd uses `liblzma`). Also, probably the rolling openSUSE, and maybe Ubuntu. Also nixos-unstable, but it doesn’t pass the `argv[0]` requirements and also doesn’t link `liblzma`. Also, Fedora.

Sid was that dickhead in Toy Story that broke the toys.

If you’re running debian sid and not expecting it to be a buggy insecure mess, then you’re doing debian wrong.

Fedora and Debian were affected only in their beta/dev branches, unlike Arch.

Unlike arch that has no “stable”. Yap, sure; idk what it was supposed to mean, tho.

Yes, but although Arch had the compromised package, it appears the package didn’t actually compromise Arch, because of how both Arch and the attack were set up.

I thought Arch was the only rolling distro that doesn’t have the backdoor. Its sshd is not linked with liblzma, and even if it were, they compile xz directly from git so they wouldn’t have gotten the backdoor anyway.

TBF they only switched to building from git after they were notified of the backdoor yesterday. Prior to that, the source tarball was used.

liblzma is the problem. sshd is just the first thing they found that it is attacking. liblzma is used by firefox and many other critical packages.

Arch does not directly link openssh to liblzma, and thus this attack vector is not possible. You can confirm this by issuing the following command:

`ldd "$(command -v sshd)"`

Yes, this sshd attack vector isn’t possible. However, they haven’t decomposed the exploit and we don’t know the extent of the attack. The reporter of the issue just scratched the surface. If you are using Arch, you should run pacman right now to downgrade.

They actually have an upgrade fix for it, at least for the known parts of it. Doing a standard system upgrade will replace the xz package with one with the known backdoor removed.
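For anyone who wants to check what they are actually running, here is a minimal sketch. It assumes the affected upstream releases are 5.6.0 and 5.6.1 (the versions named in the public advisories), and the function names are made up for illustration. A distro may ship a rebuilt, cleaned package under the same upstream version number, so a match here means "go read your distro's advisory", not "you are compromised".

```shell
#!/bin/sh
# Return 0 if the given upstream version string is one of the releases
# reported as backdoored (assumption: 5.6.0 and 5.6.1 only).
xz_version_is_affected() {
    case "$1" in
        5.6.0|5.6.1) return 0 ;;
        *)           return 1 ;;
    esac
}

# Extract the version from `xz --version`, whose first line looks like
# "xz (XZ Utils) 5.4.6".
installed_xz_version() {
    xz --version 2>/dev/null | awk 'NR == 1 { print $NF }'
}

# Only report if xz is actually installed on this machine.
if command -v xz >/dev/null 2>&1; then
    v="$(installed_xz_version)"
    if xz_version_is_affected "$v"; then
        echo "xz $v: upstream version matches an affected release, check your distro's advisory"
    else
        echo "xz $v: not one of the affected upstream versions"
    fi
fi
```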

If you are using Arch, you should run pacman right now to downgrade.

No, just update. It’s already fixed. That’s the point of rolling release.

Bold of you to assume I have upgraded in the first place.

I switched it with 5.4, just in case.

Do not use ldd on untrusted binaries.

I executed the backdoor the other day when assessing the damage.

objdump is the better tool to use in this case.
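A rough sketch of the objdump approach: `objdump -p` only parses the file's headers and prints the dynamic section, so nothing in the binary gets executed, whereas `ldd` may end up invoking the dynamic loader on it. The helper name `needed_libs` is made up for illustration. Keep in mind this lists direct dependencies only; a library pulled in indirectly (for example through another library the binary links) will not show up.

```shell
#!/bin/sh
# List the shared libraries a binary directly depends on (NEEDED entries)
# by reading its headers with objdump, without running the binary.
needed_libs() {
    objdump -p "$1" | awk '$1 == "NEEDED" { print $2 }'
}

# Example: does sshd directly link liblzma?
# (sshd may not be on PATH for non-root users; pass its full path if so.)
sshd_path="$(command -v sshd || true)"
if [ -n "$sshd_path" ] && command -v objdump >/dev/null 2>&1; then
    if needed_libs "$sshd_path" | grep -q '^liblzma'; then
        echo "sshd links liblzma directly"
    else
        echo "sshd does not link liblzma directly"
    fi
fi
```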

Interestingly, looking at Gentoo’s package, they have both the github and tukaani.org URLs listed:

https://github.com/gentoo/gentoo/blob/master/app-arch/xz-utils/xz-utils-5.6.1.ebuild#L28

From what I understand, those wouldn’t be the same tarball, and might have thrown an error.
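To illustrate the point: if a package manager records one checksum per source file name, two different tarballs fetched under that name can't both pass. A minimal sketch using `sha256sum` from coreutils; the function name `same_tarball` and the file names in the usage comment are placeholders, and this is not a description of how Gentoo's tooling is actually implemented.

```shell
#!/bin/sh
# Succeed only if both files have identical SHA-256 digests.
same_tarball() {
    a="$(sha256sum "$1" | awk '{ print $1 }')"
    b="$(sha256sum "$2" | awk '{ print $1 }')"
    [ "$a" = "$b" ]
}

# Usage (placeholder file names):
#   if same_tarball from-github.tar.gz from-tukaani.tar.gz; then
#       echo "identical"
#   else
#       echo "tarballs differ"
#   fi
```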

The extent of the exploit is still being analyzed so I would update and keep your eye on the news. If you don’t need your computer you could always power down.

It is not entirely clear whether this exploit can affect other parts of the system. This is one of those things you need to take extremely seriously.

In the case of Arch the backdoor also wasn’t inserted into liblzma at all, because at build time there was a check to see if it’s being built on a deb or rpm based system, and only inserts it in those two cases.

See https://gist.github.com/thesamesam/223949d5a074ebc3dce9ee78baad9e27 for an analysis of the situation.

So even if Arch built their xz binaries off the backdoored tarball, it was never actually vulnerable.

I just know there is a lot of uncertainty. Maybe a complete wipe is an overreaction, but it is better to be safe.

most stable

How the hell is arch more stable than Debian?

i think it’s a matter of perspective. if i’m deploying some containers or servers on a system that has well defined dependencies then i think Debian wins in a stability argument.

for me, i’m installing a bunch of experimental or bleeding edge stuff that is hard to manage in even a non LTS Debian system. i don’t need my CUDA drivers to be battle tested, and i don’t want to add a bunch of sketchy links to APT because i want to install a nightly version of neovim with my package manager. Arch makes that stuff simple, reliable, and stable, at least in comparison.

“Stable” doesn’t mean “doesn’t crash”, it means “low frequency of changes”. Debian only makes changing updates every few years, and you can wait a few more years before even taking those changes without losing security support while Arch makes changing updates pretty much every time a package you have installed does.

In no way is Arch more stable than Debian (other than maybe Debian Unstable/Sid, but even then it’s likely a bit of a wash)

If you are adding sources to Debian you are doing it wrong. Use flatpak or Distrobox although distrobox is still affected

Just Arch users being delusional. Every recent thread that had Arch mentioned in the comments has some variation of “Arch is the most stable distro” or “Stable distros have more issues than Arch”.

Old does not mean stable

It literally does though. Stable doesn’t mean bug free. It means unchanging. That’s what the term “stable distro” actually means. That the software isn’t being updated except for security patches. When people say stable distro, that is what they are trying to communicate. That means the software will be old. That’s what stable actually means.

Stable means stable

Stable is the building horses are kept in

True, but it is also out of reach of Arch users

The floor is covered in horse shit. Sounds like Arch to me!

(I kid, I kid… I run arch btw)

In my experience they’re the same from a reliability standpoint. Stuff on Arch will break for no reason after an update. Stuff on Debian will break for no reason after an update. It’s just as difficult to solve reliability problems on both.

Because Debian isn’t a rolling release you will often run into issues where a bug got fixed in a future version of whatever program it is but not the one that’s available in the repository. Try using yt-dlp on any stable Debian installation and it won’t work for example.

Arch isn’t without its issues. Half of the good stuff is on the AUR, and fuck the AUR. Stuff only installs without issues half the time. Good luck installing stuff that needs like 13+ other AUR packages as dependencies, because none of that shit can be installed automatically. On other distros, all that stuff can be installed automatically and easily with a single command.

I use Arch btw.

I have never had anything break on Debian. It has been running for years on unattended upgrades.

I’ve had the exact opposite experience. I switched to Arch when proton came out, and I haven’t had a system breakage since that wasn’t directly caused by my actions.

Debian upgrades would basically fail to boot about 20% of the time before that.

I have never had anything break on Debian.

I use Arch btw.

You can get yay for an AUR package manager, but it’s generally not recommended because it means blindly trusting the build scripts for community packages that have no real oversight. You’re typically advised to check the build script for every AUR package you install.

Stuff on Debian will break for no reason after an update

I have never had this happen on Debian servers and I’ve been using it for around 20 years. The only time I broke a Debian system was my fault - I tried to upgrade an old server from Debian 10 to 12. It’s only supported to upgrade one version at a time. Had to restore from backup and upgrade to Debian 11 first, then to 12.


I heard this so many times that I really believed arch was so brittle that my system would become unbootable if I went on vacation. Turns out updating it after 6 months went perfectly fine.

I once updated an Arch that was 2y out of date, and it went perfectly fine.

But didn’t it take a while? Not that it wouldn’t take a while on Debian but Debian doesn’t push so many updates

Not really. It’d just skip all the incremental updates and go straight to latest.

It took a bit more than usual but nothing unreasonable. 3 to 5 minutes at most, in an old MacBook pro.

I updated Arch after two months and it broke completely. I guess it’s because I had an unfathomable amount of packages and dependencies, so it varies from person to person: if you keep your system light, it may work like it did for you; if you install a giant amount of packages and dependencies, it may go like it did for me.

Arch is not vulnerable to this attack vector. Fedora Rawhide, OpenSUSE Tumbleweed and Debian Testing are.

Notice normal distros aren’t affected

tf is a normal distro?

Distros that have some sort of testing before hitting users. Arch also had the issue of killing Intel laptop displays not too long ago as well.

Maybe using the term “normal distro” is a bit of a stretch but my point is that testing is good.

Arch has regular mirrors and testing mirrors, most users use the regular ones.

In this context, I’m going to assume they mean “non-rolling-release”

Non betas/testing probably?

Windows

Arch has already updated XZ by relying on the source code repository itself instead of the tarballs that did have the manipulations in them.

It’s not ideal, since we still rely on a potentially *otherwise* compromised piece of code, but it’s a quick and effective workaround for the issue at hand without massive technical trouble.

instead of the tarballs that did have the manipulations in them

My only exposure to Linux is SteamOS so I might be misunderstanding something, but if not:

How in the world did it get infected in the first place? Do we know?

From what I read it was one of the contributors. Looks like they have been contributing for some time too before trying to scooch in this back door. Long con.

It seems like this contributor had malicious intent the entire time they worked on the project. https://boehs.org/node/everything-i-know-about-the-xz-backdoor

Wow. That is some read.

Edit: I keep thinking my jaw can’t go any closer to the floor but I keep reading and my jaw keeps dropping.

HOLY COW!

Basically, one of the contributors that had been contributing for quite some time (and was therefore partly trusted) committed a somewhat hidden backdoor. I doubt it had any effect (as it was discovered now, before being pushed to any stable distro, and the exploit itself didn’t work on Arch), but we’ll have to wait for the effect to be analyzed.

There are no known reports of those versions being incorporated into any production releases for major Linux distributions, but both Red Hat and Debian reported that recently published beta releases used at least one of the backdoored versions […] A stable release of Arch Linux is also affected. That distribution, however, isn’t used in production systems.

Ouch

Also,

Arch is the most stable

Are you high?

I think the confusion comes from the meaning of stable. In software there are two relevant meanings:

1. Unchanging, or changing the least possible amount.

2. Not crashing / requiring intervention to keep running.

Debian, for example, focuses on #1, with the assumption that #2 will follow. And it generally does, until you have to update and the changes are truly massive and the upgrade is brittle, or you have to run software with newer requirements and your hacks to get it working are brittle.

Arch, for example, instead focuses on the second definition, by attempting to ensure that every change, while frequent, is small, with a handful of notable exceptions.

Honestly, both strategies work well. I’ve had Debian systems running for 15 years and Arch systems running for 12+ years (and that limitation is really only due to the system I run Arch on, rather than their update strategy).

It really depends on the user’s needs and maintenance frequency.

- Not crashing / requiring intervention to keep running.

The word you’re looking for is reliability, not stability.

Both are widely used in that context. Language is like that.

Let me try that: “my car is so stable, it always starts on the first try”, “this knife is unstable, it broke when I was cutting a sausage”, “elephants are very reliable, you can’t tip them over”, “these foundations are unreliable, the house is tilting”

Strange, it’s almost like the word “stability” has something to do with not moving or changing, and “reliability” something to do with working or behaving as expected.

Languages generally develop to be more precise because using a word with 20 different meanings is not a good idea. Meanwhile, native English speakers are working hard to revert back to cavemen grunts, and so now for example “literally” also means “metaphorically”. Failing education and a lacking vocabulary are like that.

Amazingly, for someone so eager to give a lesson in linguistics, you managed to ignore literal definitions of the words in question and entirely skip relevant information in my (quite short) reply.

Both are widely used in that context. Language is like that.

Further, the textbook definition of Stability-

the quality, state, or degree of being stable: such as

a: the strength to stand or endure : firmness

b: the property of a body that causes it when disturbed from a condition of equilibrium or steady motion to develop forces or moments that restore the original condition

c: resistance to chemical change or to physical disintegration

Pay particular attention to “b”.

The state of my system is “running”. Something changes. If the system doesn’t continue to be state “running”, the system is unstable BY TEXTBOOK DEFINITION.

Pay particular attention to “b”.

the property of a body that causes it … to develop forces or moments that restore the original condition

That reminds me more of a pendulum. Swing it, and it’ll always go back to the original, vertical, position because it develops a restoring moment.

The state of my system is “running”. Something changes. If the system doesn’t continue to be state “running”, the system is unstable BY TEXTBOOK DEFINITION.

- That “something” needs to be the state of your system, not an update that doesn’t disturb its “steady running motion” (when disturbed from a condition of equilibrium or steady motion).

- Arch doesn’t restore itself back into a “running” condition. You need to fix it when an update causes the “unbootable” or any other different state instead of “running”. That’s like having to reset the pendulum because you swung it and it stayed floating in the air.

- What you’re arguing has more to do with “a”, you’re attributing it a strength to endure; that it won’t change the “running” state with time and updates.

I think the confusion comes from the meaning of stable. In software there are two relevant meanings:

I’m fascinated that someone that started off with this resists using two words instead of one this much. Let’s paste in some more definitions:

Cambridge Dictionary:

stability:

- a situation in which something is not likely to move or change

- the state of being firmly fixed or not likely to move or change

- a situation in which something such as an economy, company, or system can continue in a regular and successful way without unexpected changes

- a situation in which prices or rates do not change much

Debian is not likely to change, Arch will change constantly. That’s why we say Debian is stable, and Arch isn’t.

reliability:

- the quality of being able to be trusted or believed because of working or behaving well

- how well a machine, piece of equipment, or system works

- how accurate or able to be trusted someone or something is considered to be

You can and have argued that Arch is reliable.


A stable release of Arch Linux is

not a thing.

Ars uses AI now?

Bro WTF. How about you actually read up on the backdoor before slandering Arch. The backdoor DOES NOT affect Arch.

It has the freshest packages, ahead of all distros

Let me introduce you to Nixpkgs. Its packages are “fresher” than Arch’s by a large margin. Even on stable channels.

And the xz package?

As fresh as the CISA will allow it!

Not gonna lie, this whole debacle made me want to switch to NixOS.

Immediately rolling back to an uncompromised version was my first thought.

That and the fact that each application is isolated from each other right? Should hopefully help in cases like this

nixos unstable had it too: https://github.com/NixOS/nixpkgs/pull/300028

I would still be cautious as the details of this exploit have not been ironed out


what even is xz?

Very common compression utility for LZMA (.xz file)

Similar to .gzip, .zip, etc.

It’s definitely common, but zstd is gaining on it since in a lot of cases it can produce similarly-sized compressed files but it’s quicker to decompress them. There’s still some cases where xz is better than zstd, but not very many.

People don’t even know what a ~~rootkit~~ XZ is, why should they care? -Sony CEO probably

Arch users are really just cannon fodder against supply chain attacks.

We’re the front line dog. Strike me down so Debian Stable’s legacy may live on.

void doesnt have it :3

I just did: “`rm -rf xz`”

`pacman -Syu`

`find / -name "*xz*" | sort | grep -e '\.xz$' | xargs -o -n1 rm -i`

`pacman -Qqn | pacman -S -`

(and please, absolutely don’t run the above as root. Just don’t.) I carefully answered to retain any root-owned files and my backups, despite knowing the backdoor wasn’t included in the culprit package. This system now has “untrusted” status, meaning I’ll do a clean re-install of the OS once the full analysis of the backdoor payload is available.

Edit: I also booted the “untrusted” system without physical access to the web, with no GUI, and installed the fixed package transferred to it locally. (That system is also going to be `dd if=/dev/zero`’d.)